Compute servers in the department

Currently, there are two compute servers. This page gives some pointers on how to use them. The computational-load status of the servers is available here.

Setup and SSH keys

You should have a .bash_profile and .bashrc file in your home directory for setting environment variables every time you log on (or similar for other shell types). If you need inspiration, you can look at /home/bovy/.bash_profile and /home/bovy/.bashrc. To avoid backend issues with matplotlib, you should have a file .matplotlib/matplotlibrc in your home directory that sets the backend to Agg. You can download a sample file here and change the backend line to use Agg.
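As a minimal sketch of the matplotlib step, the helper below writes or updates a matplotlibrc so that it selects the Agg backend. The function name set_agg_backend is hypothetical, and the default directory is the ~/.matplotlib location named above; adjust it if your matplotlib version reads its configuration from elsewhere.

```shell
# Hypothetical helper: make sure matplotlibrc selects the Agg backend.
# $1 is the configuration directory (defaults to ~/.matplotlib, as above).
set_agg_backend() {
    rc_dir="${1:-$HOME/.matplotlib}"
    rc_file="$rc_dir/matplotlibrc"
    mkdir -p "$rc_dir"
    if [ -f "$rc_file" ] && grep -q '^[[:space:]]*backend[[:space:]]*:' "$rc_file"; then
        # An uncommented backend line exists: rewrite it, keep everything else.
        sed 's/^[[:space:]]*backend[[:space:]]*:.*/backend: Agg/' "$rc_file" > "$rc_file.tmp" &&
            mv "$rc_file.tmp" "$rc_file"
    else
        # No backend line yet: append one (this also creates the file).
        echo 'backend: Agg' >> "$rc_file"
    fi
}
```

Calling set_agg_backend without arguments acts on your home directory.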

To log on and use some of the other functionality, you should set up an SSH key, so that you can log on without typing your password. On your home computer (for example, the laptop or office computer that you use to log on to the compute servers) do

$ ssh-keygen -t rsa

to generate a key. Use a long passphrase that you will remember. On the compute server you should create a .ssh directory in your home directory

$ mkdir .ssh
$ chmod 700 .ssh
$ cd .ssh

and create an authorized_keys file

$ touch authorized_keys
$ chmod 600 authorized_keys

Log out and copy your public key to the compute server

$ cat ~/.ssh/id_rsa.pub | ssh -l USERNAME SERVER.astro.utoronto.ca 'sh -c "cat - >> ~/.ssh/authorized_keys"'
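If key-based login still asks for your password, a common cause is that the permissions set in the steps above were lost or never applied. The check below is a sketch only: the function name check_ssh_perms is hypothetical, and it simply tests for the 700 and 600 modes used on this page.

```shell
# Hypothetical helper: verify the modes set in the steps above.
# $1 is the .ssh directory to inspect (defaults to ~/.ssh).
check_ssh_perms() {
    ssh_dir="${1:-$HOME/.ssh}"
    rc=0
    # find prints the path only if it is a directory with mode exactly 700.
    if [ -z "$(find "$ssh_dir" -prune -type d -perm 700 2>/dev/null)" ]; then
        echo "$ssh_dir should be a directory with mode 700"
        rc=1
    fi
    # Same idea for authorized_keys, which should be a file with mode 600.
    if [ -z "$(find "$ssh_dir/authorized_keys" -prune -type f -perm 600 2>/dev/null)" ]; then
        echo "$ssh_dir/authorized_keys should be a file with mode 600"
        rc=1
    fi
    return "$rc"
}
```

Run check_ssh_perms on the compute server; it prints nothing and returns 0 when both modes match.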

You will also want to use a .ssh/config file on your home computer to make logging in easier. This file has entries such as

Host NICKNAME
    Hostname SERVER.astro.utoronto.ca
    User USERNAME
    ForwardAgent yes

Then you can log in simply with

$ ssh NICKNAME
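The config entry described above can also be appended with a small helper, sketched here under a few assumptions: the function name add_ssh_host is hypothetical, the directory that holds the config file must already exist, and NICKNAME, SERVER and USERNAME remain placeholders that you fill in yourself.

```shell
# Hypothetical helper: append a Host entry to an ssh config file once.
# Usage: add_ssh_host NICKNAME SERVER.astro.utoronto.ca USERNAME [CONFIG_FILE]
add_ssh_host() {
    nickname="$1"
    server="$2"
    user="$3"
    config="${4:-$HOME/.ssh/config}"
    touch "$config"
    chmod 600 "$config"   # keep the file private to your account
    # Skip the append if an entry for this nickname already exists.
    if grep -q "^Host[[:space:]][[:space:]]*$nickname[[:space:]]*\$" "$config"; then
        echo "Host $nickname already present in $config" >&2
        return 0
    fi
    {
        echo "Host $nickname"
        echo "    Hostname $server"
        echo "    User $user"
        echo "    ForwardAgent yes"
    } >> "$config"
}
```

Because of the check for an existing entry, running the helper twice with the same nickname leaves the file unchanged.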

On a Mac, Keychain will remember your key’s passphrase, so you won’t have to type it constantly. On Linux, there are similar programs.

Installing software

You should install any software locally, including packages such as galpy and apogee. All of the users of this server might be using different (development) versions of this and other software, so it’s easiest if everybody installs locally as much as is practical. Create a local/ directory in your home directory

$ cd
$ mkdir local

and then install software to that directory. Make sure to add your local/bin directory to your path (export PATH=$HOME/local/bin:$PATH in your .bash_profile). For compiled programs this means that you typically do

$ ./configure --prefix=$HOME/local ...
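A slightly more defensive variant of the PATH line, offered as a sketch rather than a requirement, only prepends $HOME/local/bin when it is not already present. This avoids duplicate entries if .bash_profile is sourced more than once.

```shell
# Prepend ~/local/bin to PATH only if it is not already listed.
case ":$PATH:" in
    *":$HOME/local/bin:"*) ;;                 # already present: do nothing
    *) PATH="$HOME/local/bin:$PATH" ;;        # otherwise put it first
esac
export PATH
```

Placing the directory first means that locally installed programs take precedence over system-wide ones with the same name.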

For Python it is recommended that you install Miniconda and manage your own Python installation, rather than using the Python version provided by the OS (which is difficult to keep up-to-date).

Installing FERRE with the apogee package locally doesn’t work (the installer can’t figure out how to copy the necessary binaries to $HOME/local/bin). To install it, run the installation with --install-ferre, let it fail, and then copy the ferre and ascii2bin binaries to $HOME/local/bin yourself (and make them executable with chmod u+x ~/local/bin/ferre and chmod u+x ~/local/bin/ascii2bin).
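The manual copy step can be sketched as a small function. The name install_ferre_bins is hypothetical, and the source directory is an argument you must supply yourself, because where the failed installation leaves the compiled ferre and ascii2bin binaries is not specified here.

```shell
# Hypothetical helper: copy the two FERRE binaries and make them executable.
# $1: directory that contains the compiled ferre and ascii2bin (you supply it)
# $2: destination directory (defaults to ~/local/bin)
install_ferre_bins() {
    src_dir="$1"
    dest_dir="${2:-$HOME/local/bin}"
    mkdir -p "$dest_dir"
    for name in ferre ascii2bin; do
        if [ ! -f "$src_dir/$name" ]; then
            echo "missing $src_dir/$name" >&2
            return 1
        fi
        cp "$src_dir/$name" "$dest_dir/$name"
        chmod u+x "$dest_dir/$name"
    done
}
```

The function stops at the first missing binary and returns a non-zero status, so a wrong source directory is easy to spot.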

Running NEMO

NEMO is installed on the server. To be able to use it, you can add the following line to your .bash_profile or .bashrc

if [ -z "$NEMO" ]; then source ~bovy/Repos/nemo/nemo_start.sh; fi

You can also install NEMO locally yourself, in which case you probably want to start from this GitHub version.

Running IPython/Jupyter notebooks/lab

You can run an IPython/Jupyter notebook on the server while manipulating and displaying it on your home computer using the following steps. First, log in to the compute server and start a notebook server on some port PORT (PORT should be something like 8889, but choose something different to avoid overlap with other users)

$ jupyter notebook --no-browser --port=PORT

or for Jupyter lab

$ jupyter lab --no-browser --port=PORT

Check that you don’t get an error message saying that PORT is already in use.

On your local, home computer open an SSH tunnel as follows

$ ssh -N -L localhost:8888:localhost:PORT NICKNAME

where NICKNAME is the same ssh shortcut as above. If you have an IPython notebook running locally you need to use a different local port (not 8888, because that will be used by the local notebook). Then open a tab in your browser and navigate to

localhost:8888

which displays the notebook server that is running remotely. To close the SSH tunnel, just terminate the ssh process that runs the tunnel.

You can start the remote notebook server inside a UNIX screen session. You can then detach the screen and log out while the process keeps running remotely, which also safeguards you against losing the connection to the remote server while you are working in the notebook.

Note that computations in the notebook only keep running while you are connected to the notebook using your browser. The kernel itself does keep running if you are disconnected, so when you reconnect, the notebook will still have all of the variables etc. that you defined. Therefore, do not use this to run long computations.
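To keep the local and remote ports straight, the tunnel command from this section can be assembled by a small helper. This is only a sketch: the name tunnel_cmd is hypothetical, it prints the command rather than running it, and the 1024 to 65535 range check assumes you are choosing an ordinary unprivileged port.

```shell
# Hypothetical helper: print the ssh tunnel command for a given setup.
# Usage: tunnel_cmd REMOTE_PORT NICKNAME [LOCAL_PORT]   (LOCAL_PORT defaults to 8888)
tunnel_cmd() {
    remote_port="$1"
    nickname="$2"
    local_port="${3:-8888}"
    for p in "$remote_port" "$local_port"; do
        # Reject anything that is empty or contains a non-digit.
        case "$p" in
            ''|*[!0-9]*) echo "port must be a number: $p" >&2; return 1 ;;
        esac
        if [ "$p" -lt 1024 ] || [ "$p" -gt 65535 ]; then
            echo "port out of range (1024-65535): $p" >&2
            return 1
        fi
    done
    echo "ssh -N -L localhost:$local_port:localhost:$remote_port $nickname"
}
```

For example, tunnel_cmd 8889 NICKNAME 9999 prints a command that forwards local port 9999, which is useful when a local notebook already occupies 8888; you would then navigate to localhost:9999 instead.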

Adding users

Adding users is easily done by

$ sudo adduser NEW_USERNAME

which will ask for a new password; you can create one using a service like RANDOM.ORG. The new user should change this password immediately. Make a scratch directory and change its ownership to the new user with

$ sudo chown -R NEW_USERNAME NEW_USERNAME

The above only creates the account on one server. To make the same account on all servers, add the new user with useradd on each of the other servers after they have set up their password (don’t use adduser, just useradd). Then edit /etc/passwd and /etc/group so that the user’s entries are the same on every server, and copy the entry between the first two colons in /etc/shadow (the password hash; note that you might have to make /etc/shadow writeable first).
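To see exactly which part of /etc/shadow is meant, the sketch below prints the second colon-separated field for one user, which is the text between the first two colons. The function name shadow_hash is hypothetical; reading the real /etc/shadow requires root privileges.

```shell
# Hypothetical helper: print the password-hash field of one user.
# $1: user name; $2: shadow-format file (defaults to /etc/shadow)
shadow_hash() {
    user="$1"
    file="${2:-/etc/shadow}"
    # Fields are separated by colons; field 1 is the name, field 2 the hash.
    awk -F: -v u="$user" '$1 == u { print $2 }' "$file"
}
```

Comparing the output of shadow_hash NEW_USERNAME across servers is one way to confirm that the copied entry matches everywhere.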

To allow a user to use Docker, add them to the docker group

$ sudo usermod -aG docker NEW_USERNAME

To delete a user do

$ sudo deluser --remove-home NEW_USERNAME

on all servers. Also remove the scratch directory.

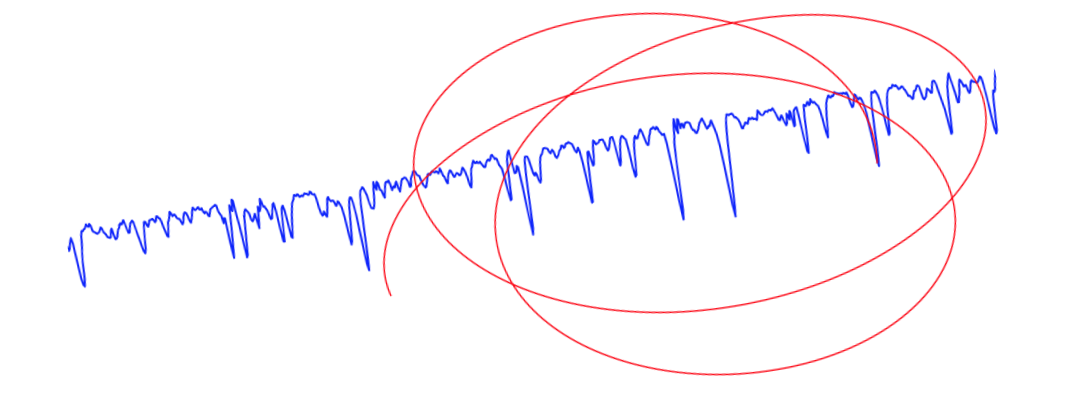

The server status page

The server status page is automatically generated, but might need a bit of care to keep going or restart. Essentially, a script exists in $HOME/monitor/monitor.sh that needs to be run on each server. This script uses data collected by sysstat, creates a graph, and copies it to the web server, where it is then automatically sorted by reverse date and displayed.

To make sure sysstat is running do

$ sudo systemctl start sysstat

To make sure sysstat is started whenever the server restarts, do

$ sudo systemctl enable sysstat

To check the status, do

$ systemctl status sysstat

and to specifically check that the scheduled data-collection job (a systemd timer) is active, do

$ systemctl list-timers | grep sysstat

If this is all working, then the data is being collected. All that is left is to make sure that the monitor.sh script is run automatically, so add to the crontab

*/10 * * * * /home/bovy/monitor/monitor.sh > /home/bovy/monitor/monitor_SERVER.log 2>&1

where SERVER is the server’s name. Note that everything in this section needs to be run on each server!
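Since the crontab entry has to be added on every server, a repeatable way to add it can help. The filter below is a sketch: the name with_monitor_job is hypothetical, it reads an existing crontab on standard input, drops any earlier monitor.sh entry, and appends the line shown above with SERVER filled in.

```shell
# Hypothetical filter: read a crontab on stdin, write it back out with
# exactly one monitor.sh entry for the given server name ($1).
with_monitor_job() {
    job="*/10 * * * * /home/bovy/monitor/monitor.sh > /home/bovy/monitor/monitor_${1}.log 2>&1"
    # Pass through every existing line except old monitor.sh entries.
    # grep exits non-zero when nothing is left, which is fine here.
    grep -vF '/monitor/monitor.sh' || true
    echo "$job"
}
```

A possible way to use it, assuming your cron implementation accepts a new crontab on standard input, is crontab -l 2>/dev/null | with_monitor_job SERVER | crontab - on each server. Running it again does not create a duplicate entry.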